Last week I shared an article about integrating Power Automate flows with Dynamics 365 FinOps to export data through the Data management package REST API. We looked at the two APIs that support file-based integration scenarios and can serve as batch data APIs in D365 FinOps, and at the decision points for choosing between them.

See the previous article: Data export via Power Automate and the Data management package API

Today’s scenario: Data export through Azure Logic Apps and business events

In this article we continue the D365 FinOps data integration series, which presents the different methods and integration tools that can connect FinOps apps with other applications.

Today’s goal is to present another way to create a continuous data export flow from D365 FinOps to Blob storage, by combining business events and Azure Logic Apps.

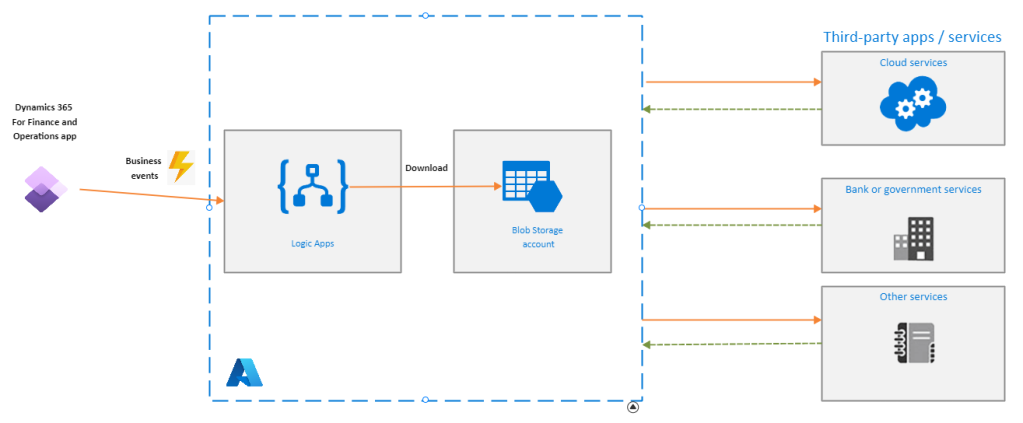

In this scenario we will use the following elements:

- Dynamics 365 for Finance and Operations

- Data management project

  - An export project with the Integration category.

  - We will use the recurring data export option to enable a continuous exchange of documents or files between D365 FinOps and third-party applications.

- Business events

  - We will create a custom business event that is used as the trigger in the Logic App workflow.

- Azure Logic Apps

  - Used to develop the automated cloud flow that communicates with the D365 FinOps app and is triggered by the business event to run the next actions.

  - The workflow actions retrieve the last executed batch job of the export project and push the generated CSV file to the Azure Blob storage account after each successful export.

- Azure Blob storage account

  - The final destination of the CSV files exported from D365 FinOps.

  - Ideal for flexible, long-term storage of massive amounts of data.

  - Recommended for custom integration scenarios between the Dynamics 365 FinOps app and other applications (bank file storage, procurement, exchange of files and documents with external applications, etc.).

Dynamics 365 FinOps, and the need for continuous data integration

One of the coolest things that I love about D365 FinOps is how easily it integrates, through Power Platform tools and Azure services, with external applications.

During my last DynamicsCon presentation I mentioned that « The ERP is not an isolated tool, and it must be fully integrated to communicate easily with external applications ». I believe an ERP integration strategy should go beyond offering deployable technical tools: the aim is to create a system with high added value. Achieving this brings the following benefits:

A system with high added value through shared data: Azure Blob storage as a solution

One of the main challenges when you have not implemented data export / import integration patterns is that productivity is limited by multiple data silos. Each department stores its data for years in a different system, often on-premises, while newer applications are fully cloud-based.

The result is redundancy, inefficiency, and inaccurate data.

Effective, automated processes with Azure Logic Apps

Adopting the classic operating model without a clear data integration strategy leads to a system with many manual processes for moving information between the D365 FinOps app and other systems.

Dual-write is worth mentioning as a great out-of-the-box solution for this kind of problem. It provides near-real-time interaction between Dynamics 365 FinOps apps and other Dynamics 365 products via a common Dataverse, which is fully connected to Power Platform tools and to other Azure services such as Azure Logic Apps, which we are going to use in this article.

A flexible system with business events

Disconnected flows and data silos mean manual data cleaning, manual data verification, and manual integration, all of which delay the data exchanged between systems.

Business events provide a mechanism that lets external systems receive notifications from D365 FinOps apps. The target systems can then perform business actions when a business event occurs in D365 FinOps.

Create the custom Business event to be consumed after each successful data export

Before creating the data export project, we will develop the custom business event that is used later as the trigger in the Logic App workflow.

This business event supports the scenario of a successful data export. In other words, after each successful or partially successful export of the data entity, we send a business event to Azure Logic Apps.

The intent of the custom business event

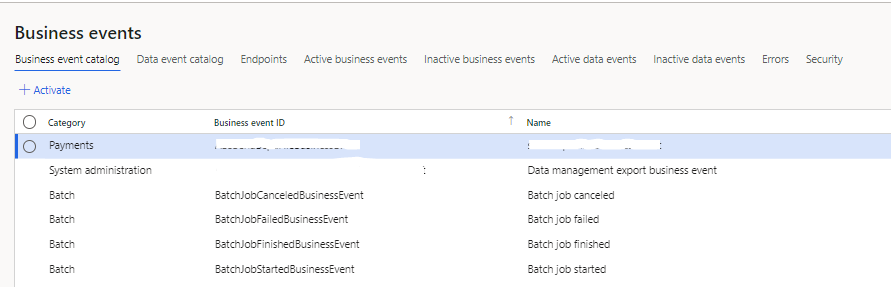

Business events are implemented out of the box in some business processes; they include workflow and non-workflow business events.

In this scenario we implement a custom business event whose purpose is to act as a trigger outside D365 FinOps.

As with any business event, the process for implementing a custom one is as follows:

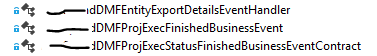

- Contract class: extends the BusinessEventsContract class. It defines the payload of the business event and allows the contract to be populated at runtime.

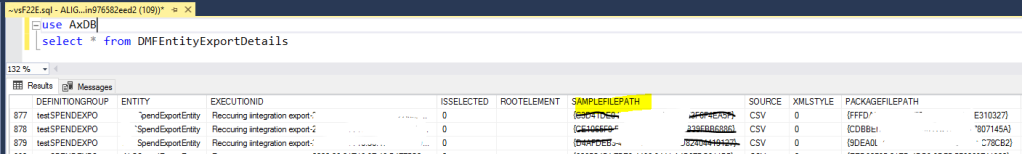

- We use the DMFEntityExportDetails table to populate the variables declared in the contract.

- DMFEntityExportDetails is also used to get the Azure Blob URL.

- Business event class: extends the BusinessEventsBase class. It supports constructing the business event, building the payload, and sending the business event.

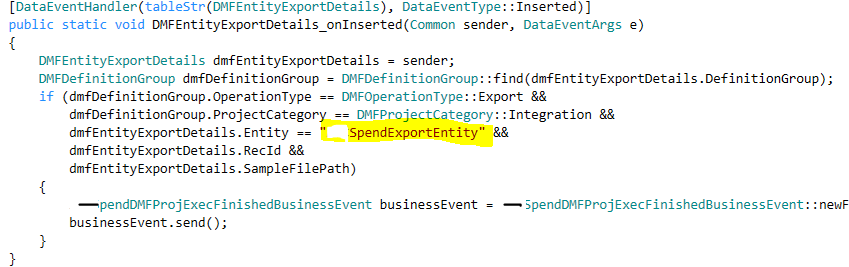

- Code to send the event: this is the code that raises the event itself. Here we call it from an event handler, which initializes the contract.

- In this case we send the event from the Inserted event handler.
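To make the relationship between these three pieces more concrete, here is a minimal sketch in Python. The real classes are written in X++ and extend BusinessEventsContract and BusinessEventsBase; this is only a language-neutral model of the pattern. The class names, the field names, and the `sink` list that stands in for the configured endpoint are all hypothetical.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class ExportCompletedContract:
    """Plays the role of the contract class: it defines the payload."""
    # Hypothetical field names; the article does not list the real ones.
    definition_group: str
    entity_name: str
    file_url: str


class ExportCompletedEvent:
    """Plays the role of the business event class: builds and sends the payload."""

    def __init__(self, contract, sink):
        self.contract = contract
        self.sink = sink  # stand-in for the endpoint that receives the event

    def send(self):
        # Serialize the contract to JSON and hand it to the endpoint.
        self.sink.append(json.dumps(asdict(self.contract)))


def on_export_details_inserted(record, sink):
    """Plays the role of the Inserted event handler: fills the contract, sends the event."""
    contract = ExportCompletedContract(
        definition_group=record["definition_group"],
        entity_name=record["entity_name"],
        file_url=record["file_url"],
    )
    ExportCompletedEvent(contract, sink).send()
```

The point of the split is that the handler only knows which record was inserted, the contract only knows the shape of the payload, and the event class only knows how to send it.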

You can find a good example of these business event classes in the following GitHub project.

Rebuild your business events catalog

Once your business event classes have been added to your model, you have to rebuild the business events catalog via Manage > Rebuild business events catalog.

Integration data management project in D365 FinOps

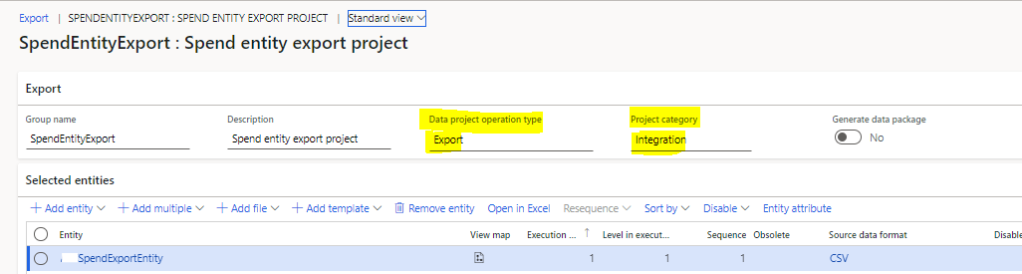

In this step we create a new data export project; here I export the custom Spend data entity.

It’s essential that the category of your data export project is Integration.

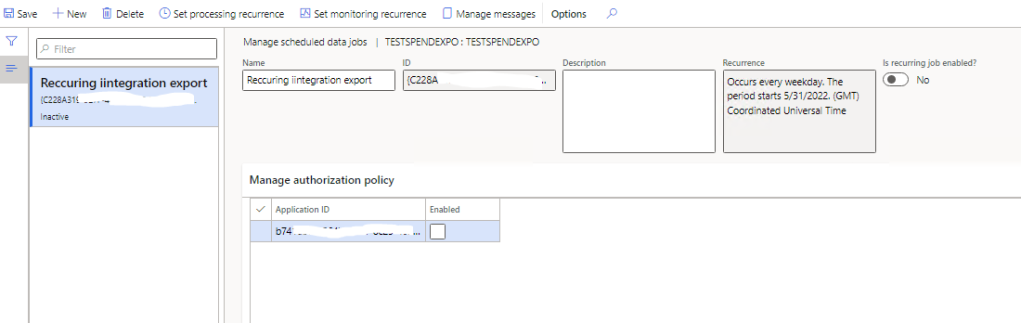

In this example I activate the recurring data export job and set the processing recurrence to every weekday.

Azure Logic Apps to push D365 FinOps exported data to Blob storage

A logic app can be created via the Azure portal or Visual Studio.

In this article I create a new Azure Resource Group project (which holds an ARM, Azure Resource Manager, template) in Visual Studio, because I want to keep my Logic App under version control and deploy it via Azure DevOps.

The project is based on an empty logic app template.

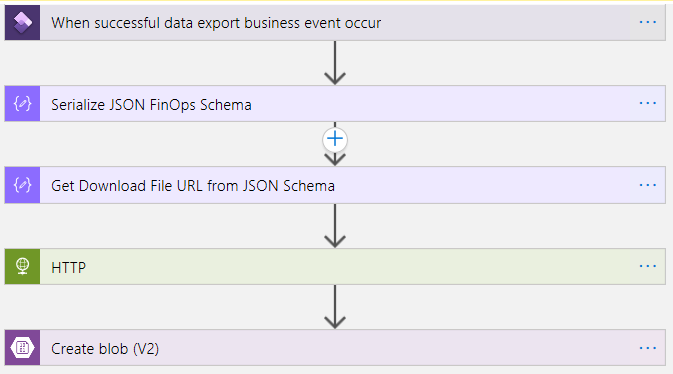

Now let’s create the Logic App workflow and develop the appropriate actions. The goal is to build an automated cloud flow, triggered by our custom business event, that pushes the Spend CSV files to the blob storage account.

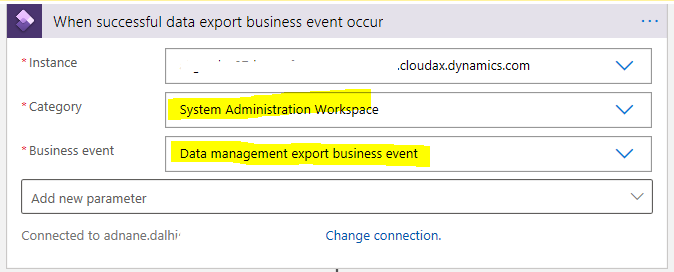

Trigger: When the successful data export business event occurs

The connector can connect to any instance of Finance and Operations apps on the Azure Active Directory tenant.

The following information must be provided in the When a Business Event occurs trigger:

- Category: the category of business events. I have added my custom business event under the System administration category.

- Business event: select the custom business event that should trigger the flow. If you can’t see your custom business event in the list, try typing its name manually.

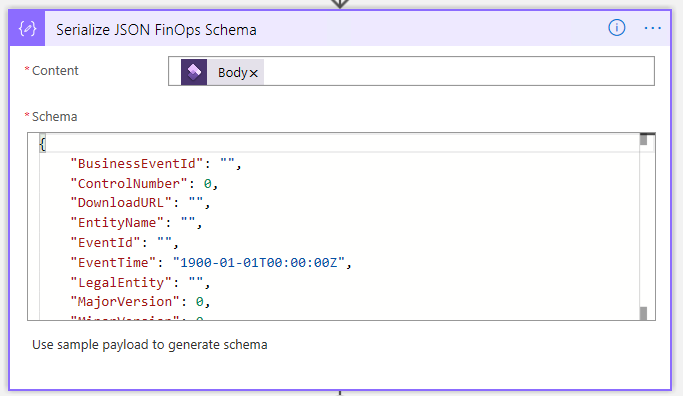

Action: Serialize JSON FinOps Schema

The trigger generates a JSON payload that represents all the data member fields we added to our business event contract. You can download the schema directly from the Business events catalog form in D365 FinOps.


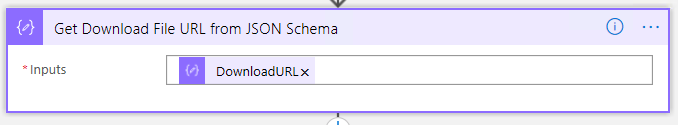

One of the main fields we are interested in is the URL exposed through the JSON schema. In this step we retrieve and save that file path.
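Outside the Logic Apps designer, the same step amounts to parsing the payload and reading one field. The sketch below assumes the contract exposes the file location in a field called `FileUrl`; that name, like the sample payload in the usage note, is hypothetical and should be replaced by the field shown in the schema you downloaded from the catalog.

```python
import json


def extract_file_url(payload_text, url_field="FileUrl"):
    """Parse a business event payload and return the exported file URL.

    `url_field` is an assumed name; use the one from your own contract.
    """
    payload = json.loads(payload_text)
    url = payload.get(url_field)
    if not url:
        # Fail loudly instead of passing an empty URL to the download step.
        raise KeyError(f"Payload has no usable '{url_field}' field")
    return url
```

For example, calling `extract_file_url('{"BusinessEventId": "SpendExportCompleted", "FileUrl": "https://example.invalid/spend.csv"}')` returns the value of the `FileUrl` field.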

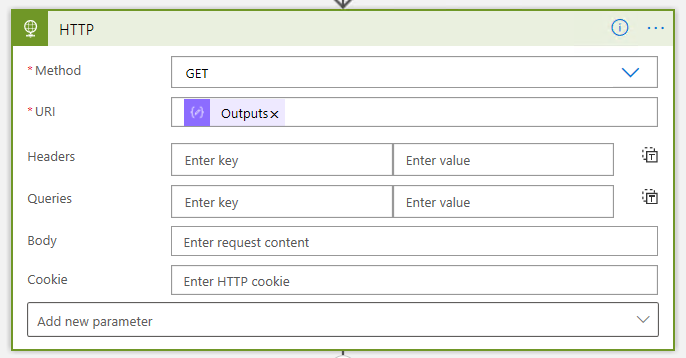

Action: HTTP Download

In this step we are going to add an HTTP GET request to download the file from the URL that was returned in the previous step.
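For readers who want to reproduce this step outside Logic Apps, here is a minimal sketch using only the Python standard library. It assumes the URL can be read with a plain GET and no extra headers, which may not hold in every environment; the function name and the timeout value are my own choices.

```python
import urllib.request


def download_export(url, timeout=60):
    """Download the exported file with an HTTP GET and return its raw bytes.

    For HTTP(S) URLs, urlopen raises urllib.error.HTTPError on error
    status codes, so a normal return means the request succeeded.
    """
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()
```

The function returns bytes rather than text, so the CSV content reaches the blob step unchanged, whatever its encoding.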

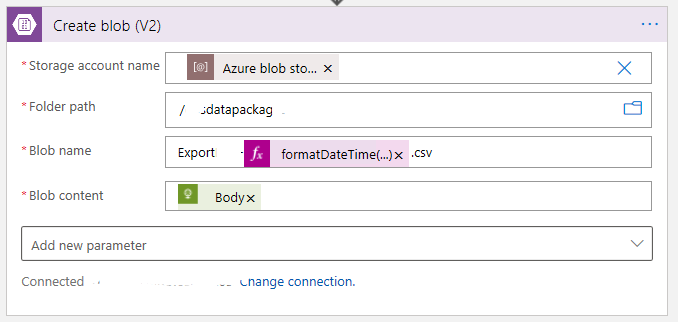

Action: Create the blob

The last step is to create the block blob and push the CSV file into a specific folder path.
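The folder path itself is a design choice. As one possible convention (an assumption of mine, not something the flow requires), the sketch below builds a blob name partitioned by export date, so that the recurring exports do not overwrite each other. The function and parameter names are hypothetical.

```python
import posixpath
from datetime import datetime, timezone


def build_blob_name(folder, file_name, exported_at):
    """Return a blob name such as <folder>/<yyyy>/<mm>/<dd>/<file_name>.

    Blob names always use forward slashes, hence posixpath.
    """
    date_part = exported_at.strftime("%Y/%m/%d")
    return posixpath.join(folder.strip("/"), date_part, file_name)


# Example: name for a file exported now, under a hypothetical "spend" folder.
blob_name = build_blob_name("spend", "spend.csv", datetime.now(timezone.utc))
```

In the Logic App, the same value would go into the blob name and folder path fields of the Create blob action.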
